The Old Grid used to be relatively simple, with generation following load:

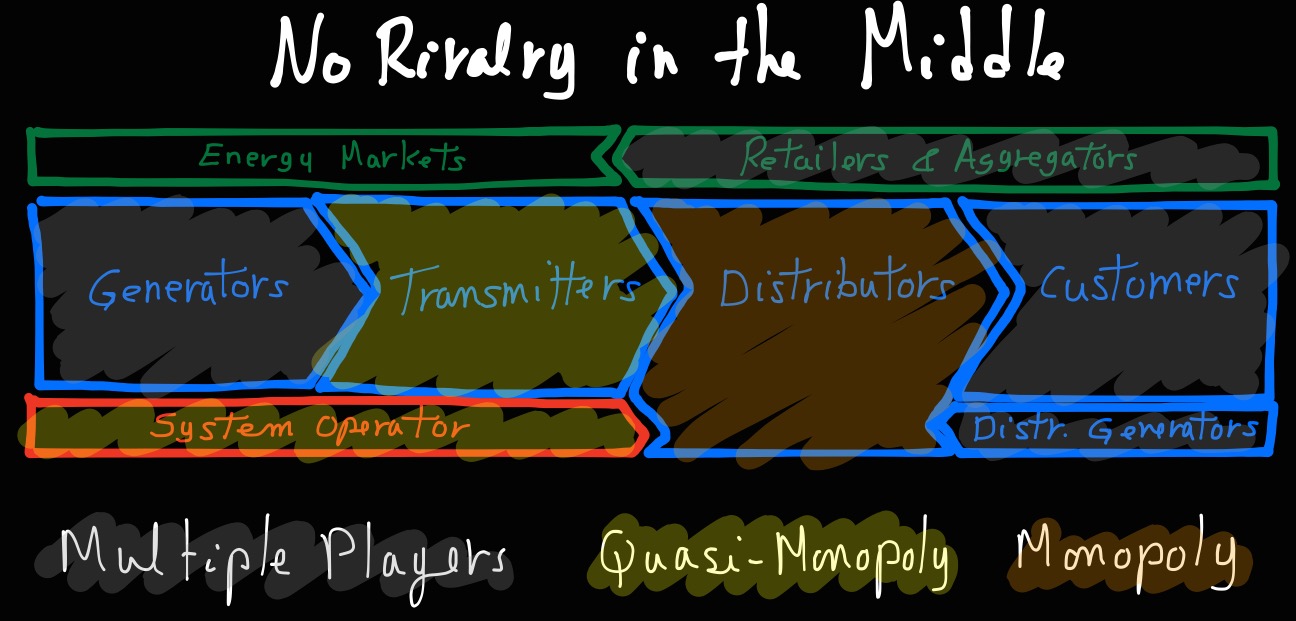

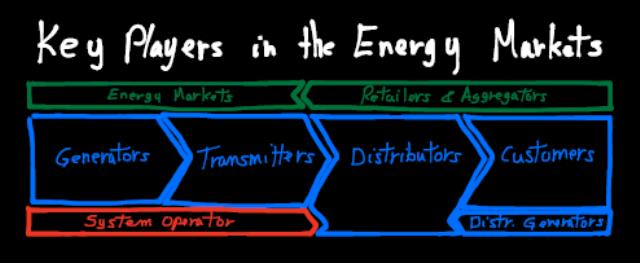

It is now a lot more complicated, and the grid keeps transforming:

- We are decommissioning fossil plants to reduce GHG emissions, and nuclear plants because of safety concerns.

- There are only so many rivers, so building new hydro plants is not a sufficient solution.

- We are then replacing fossil and nuclear base load plants with renewables that are intermittent.

- To compound the problem of balancing the grid, loads are also becoming peakier, with a reduced load factor (average load divided by peak load). Interestingly, many energy conservation initiatives actually increase power peaks.

- To connect the new renewable generation, we then need to build more transmission. The transmission network also allows network operators to spread generation and load over more customers – geographic spread helps smooth out generation and load.

- Building new transmission lines faces local opposition and takes a decade. The only other alternatives to balance the grid are storage … and Demand Management.

- Another issue is that we are far more dependent on the grid than we used to be. With electric cars, an outage during the night may mean that you can’t go to work in the morning. So, we see more and more attention to resiliency, with faster distribution restoration using networked distribution feeders, as well as microgrids for critical loads during sustained outages.

- Renewable generation and storage can be placed more effectively on the distribution network, although small-scale generation and storage are much more expensive than community-scale generation and storage.

- With distributed generation, distributed storage and a networked distribution grid, energy flow on the distribution grid becomes two-way. This requires additional investment in the distribution grid and renewed attention to electrical protection (remember the screwdriver).
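To make the load factor and geographic spread points from the list more concrete, here is a minimal sketch. It uses the usual definition of load factor (average load divided by peak load over the same period). The hourly load profiles and the names `load_factor`, `evening_peaker`, `morning_peaker` and `after_conservation` are made up for illustration; they are not measured data.

```python
def load_factor(load_kw):
    """Average load divided by peak load over the same period."""
    average = sum(load_kw) / len(load_kw)
    return average / max(load_kw)


# Hypothetical hourly loads (kW) over six hours, for illustration only.
evening_peaker = [2, 2, 2, 2, 8, 2]
morning_peaker = [2, 8, 2, 2, 2, 2]

# Assumed effect of a conservation measure that trims off-peak use
# but leaves the peak untouched: the load becomes "peakier".
after_conservation = [1, 1, 1, 1, 8, 1]

# Serving both customers from the same network: their peaks do not
# coincide, so the combined profile is smoother than either one alone.
combined = [a + b for a, b in zip(evening_peaker, morning_peaker)]

print(load_factor(evening_peaker))      # 0.375
print(load_factor(after_conservation))  # lower than 0.375
print(load_factor(combined))            # 0.6
```

In this toy case, trimming only off-peak consumption lowers the load factor, while combining two customers whose peaks fall at different hours raises it. That is the smoothing effect that a wider transmission network provides at a much larger scale.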

All of this costs money and forces the utilities to adopt new technologies at a pace that has not been seen in a hundred years. The new technology is expensive, and renewable generation, combined with the cost of storage, increases energy costs. There is increasing attention to reduction of operating costs and optimization of assets.